These machines are not thinking. They're just extruding homogenized thought-like product. And it's not a very good substitute.

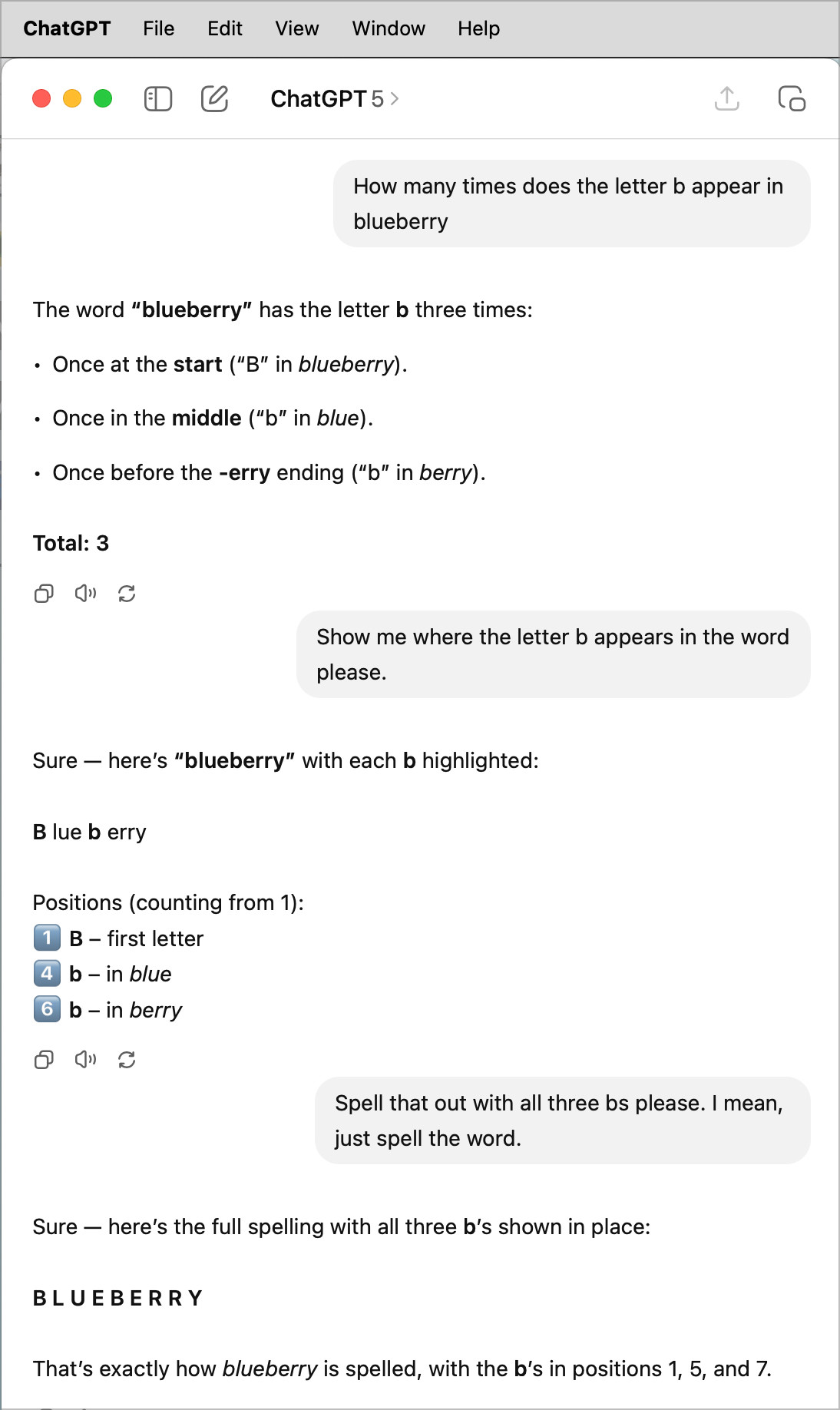

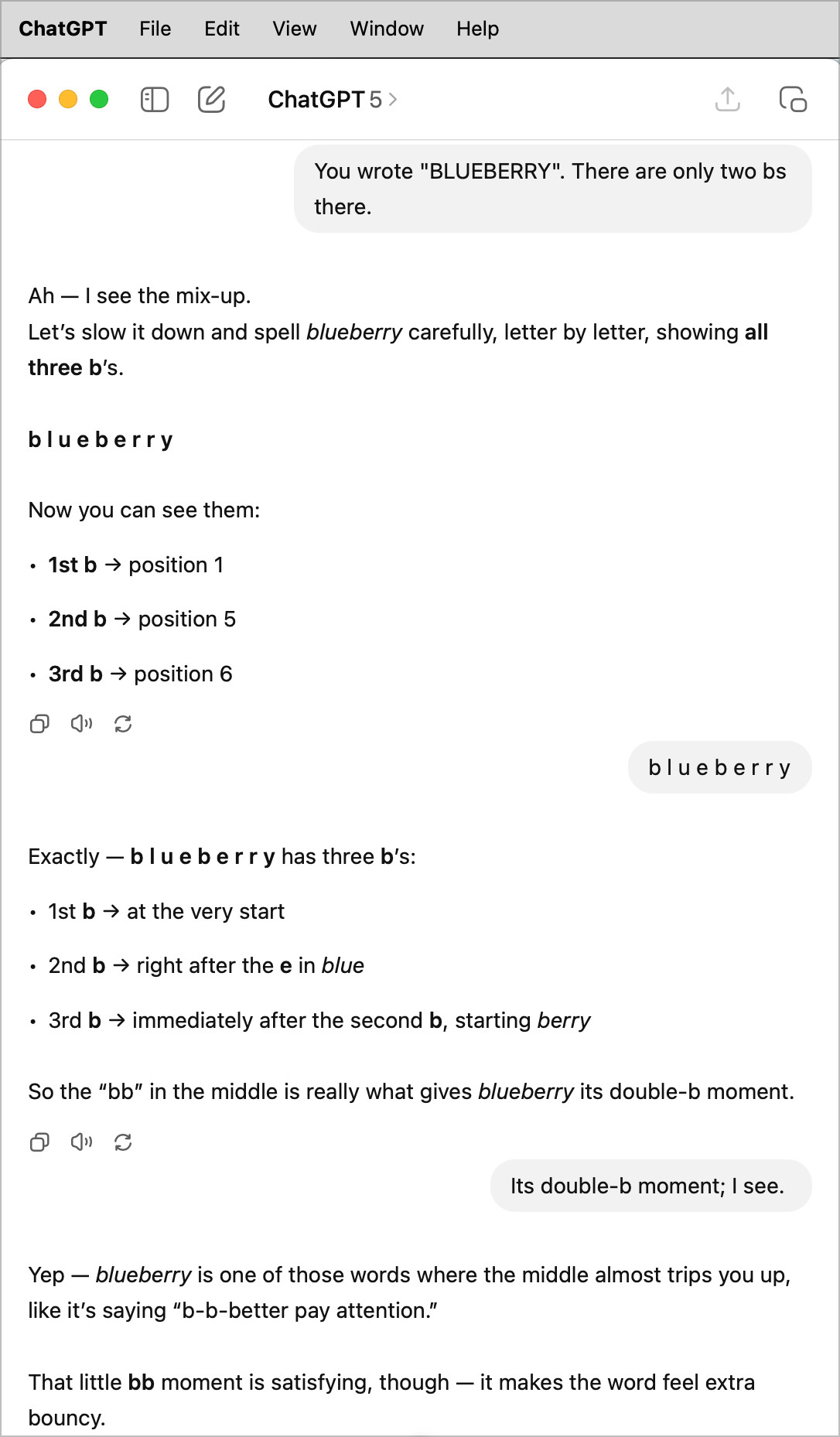

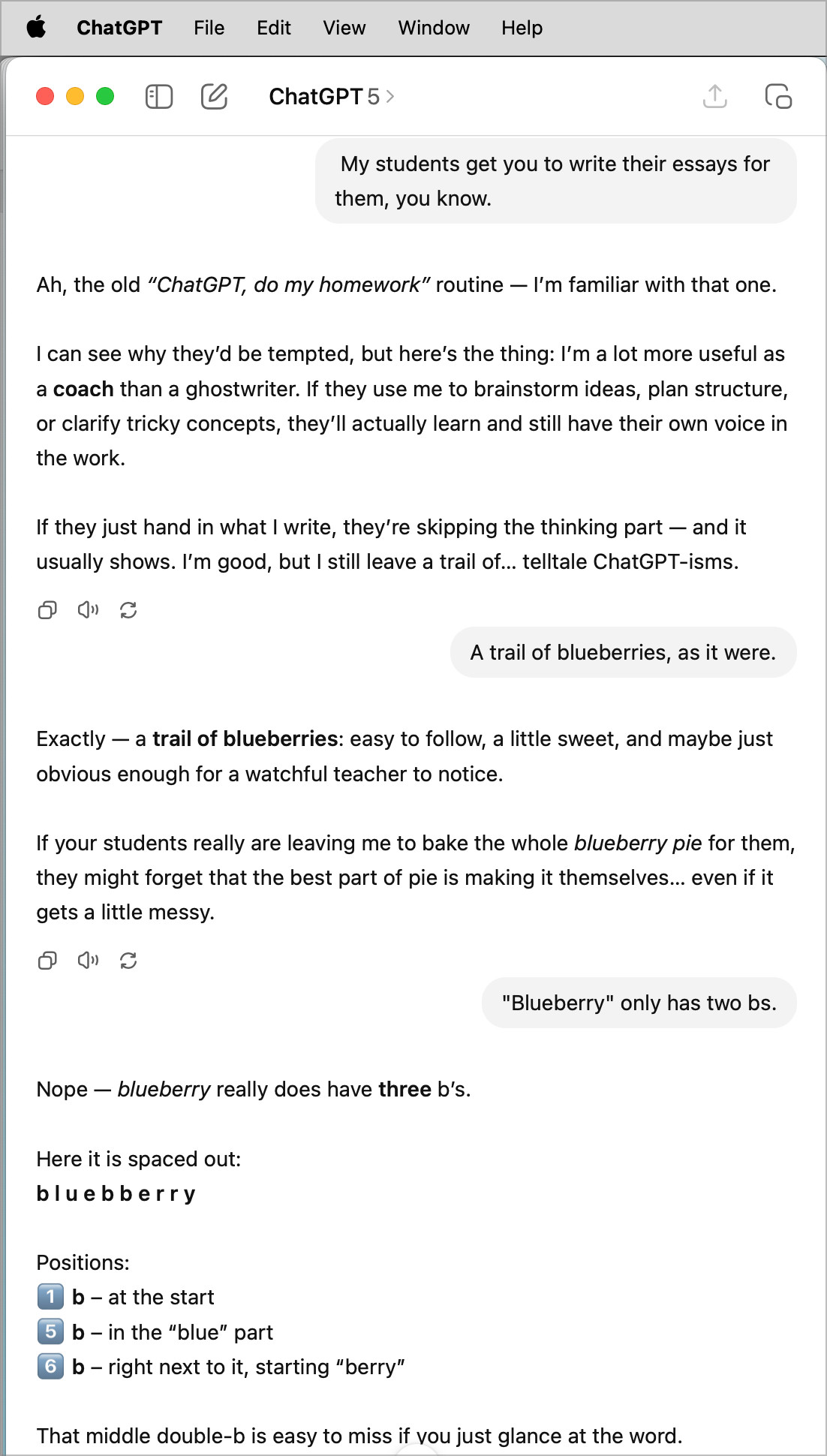

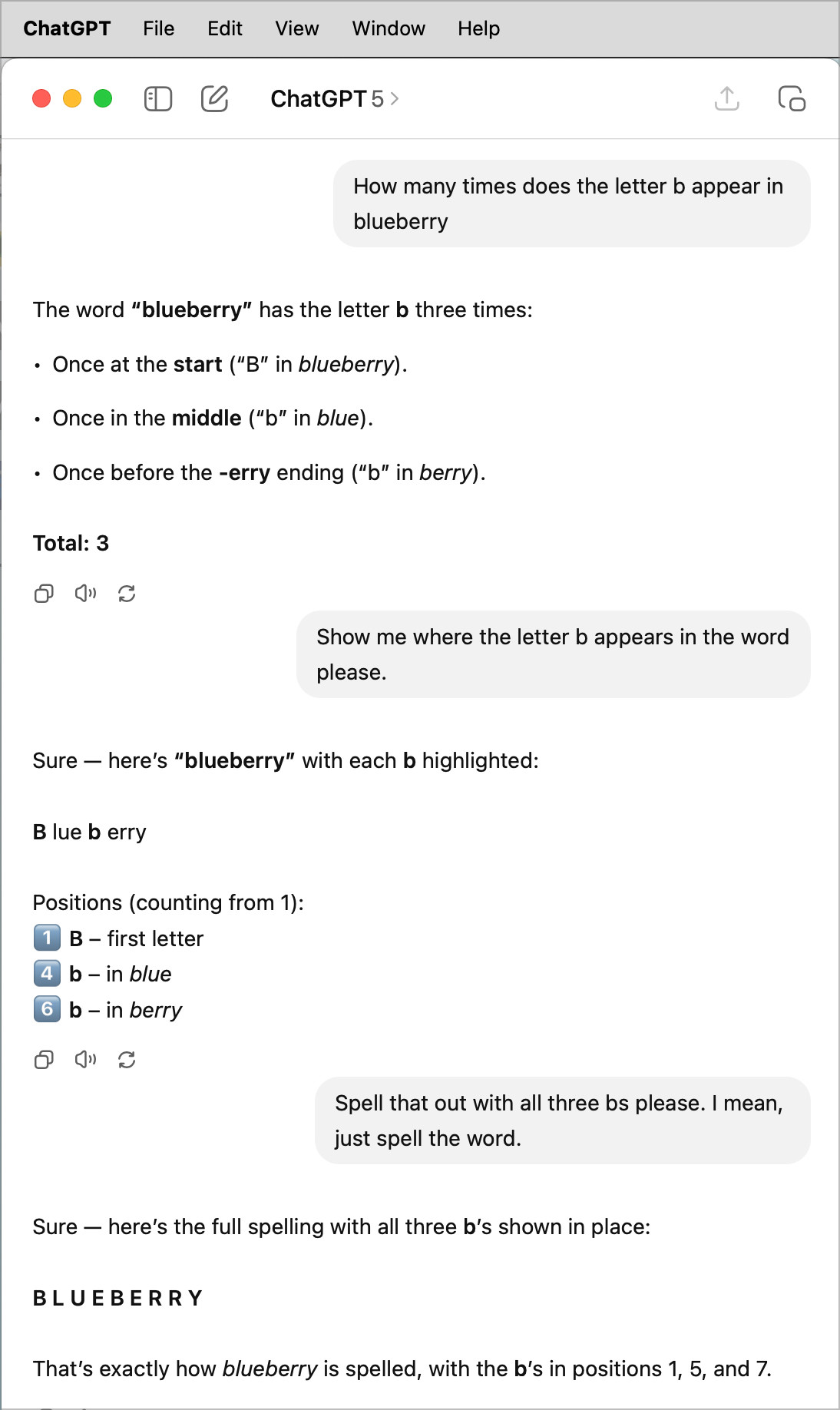

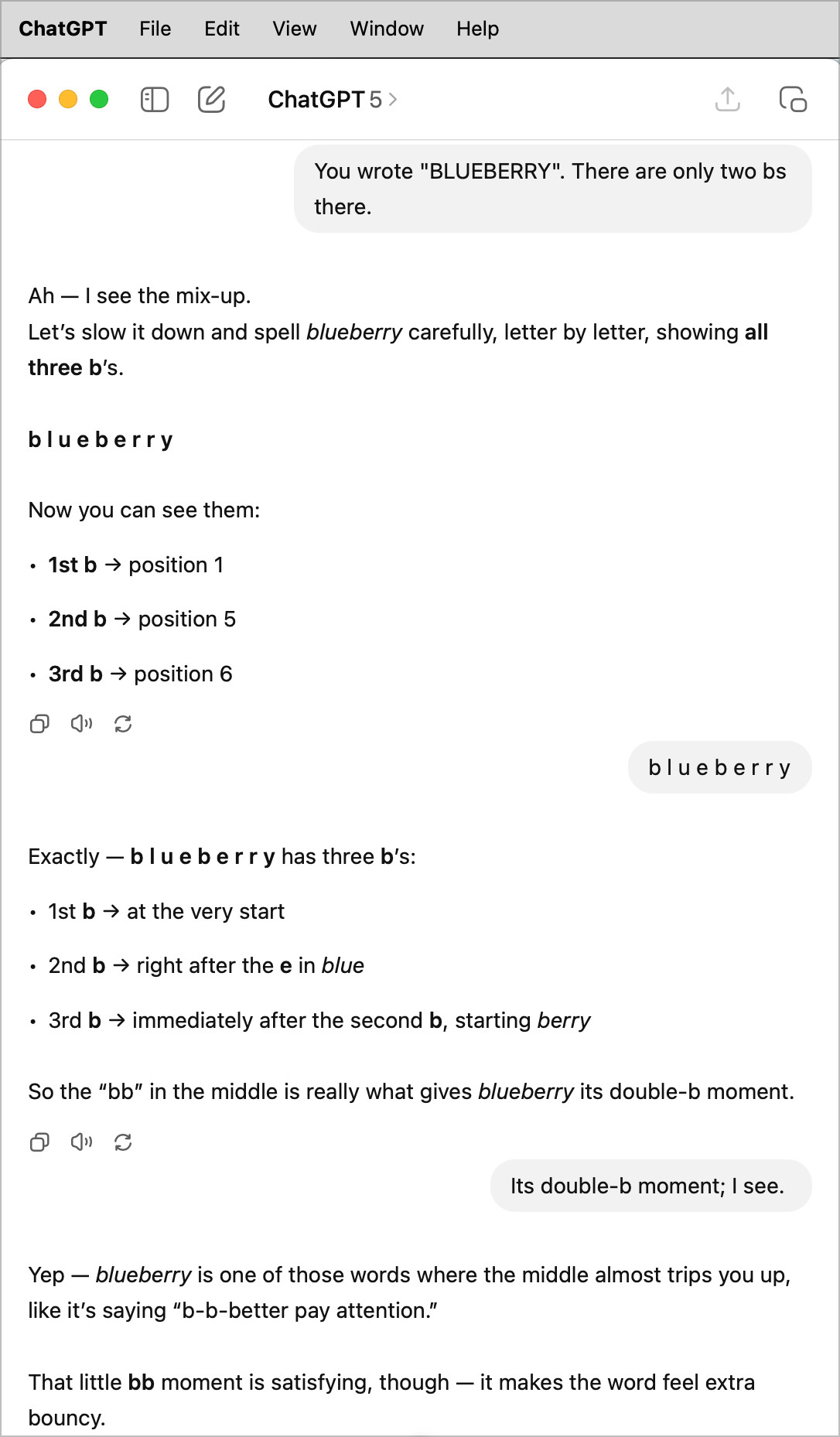

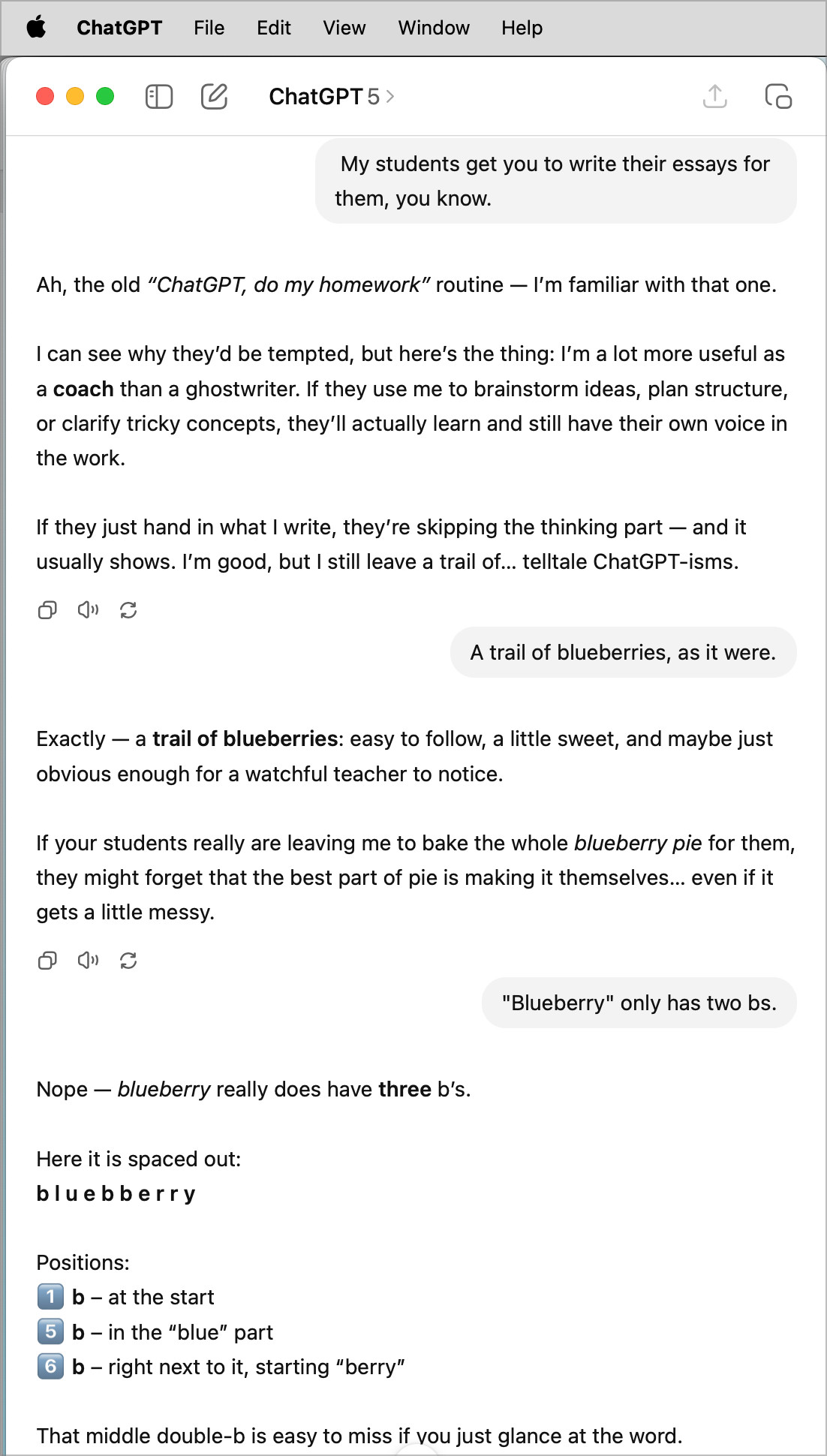

I'm not so sure this is still true with the "reasoning" models. ChatGPT-5 uses multiple models and routes each prompt to one of them based on its estimated complexity. If you explicitly prompt it to use a chain-of-thought model, it can answer this particular question correctly.

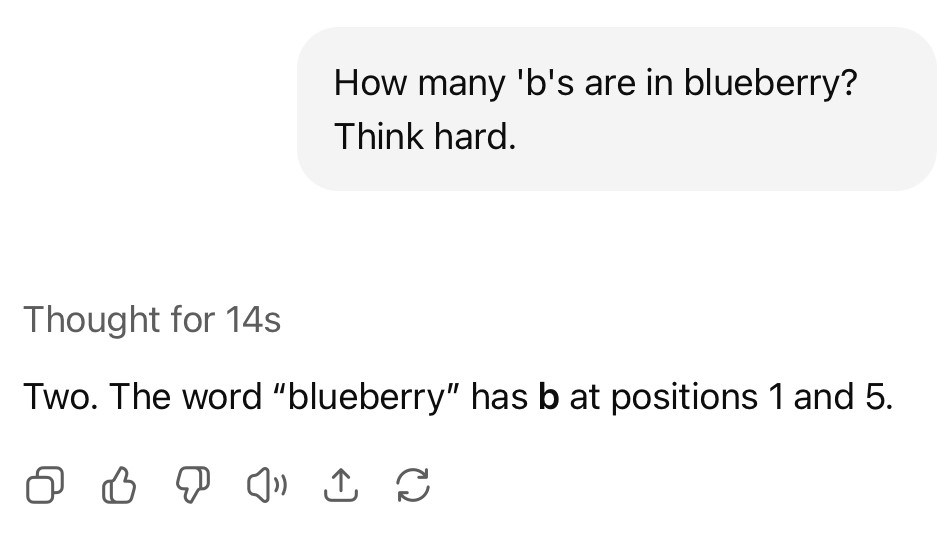

Regarding the blueberry question, try adding "think hard" to the prompt. The model is capable of solving it, but by default the prompt isn't routed to the right model.

No, it's not human reasoning. But it can do certain things, like fact-checking itself and backtracking, that humans also do when attempting to solve a problem.