I can

Really not happy with any social media anymore but trying this one again…

We built a calculator that doesn't work, but don't worry, it's also a plagiarism machine that will tell you to kill yourself. It runs on the world's oceans and costs 10 trillion dollars.

I can't stand the condescension of the people who think the only way to "embrace AI" is to buy into the bullshit narratives of the billionaires who are selling it. Tip: Ask early on, has your company ever enabled a genocide or made a product that encouraged self-harm? Maybe don't buddy up to them.

Here's the thing — I'm a technologist, and I'm not an anti-AI, or even necessarily an anti-LLM technologist. But your approach here is not meeting the challenge, and it's not centering education and learners. It's a credulous, facile approach that's taking the word of big-tech liars at face value.

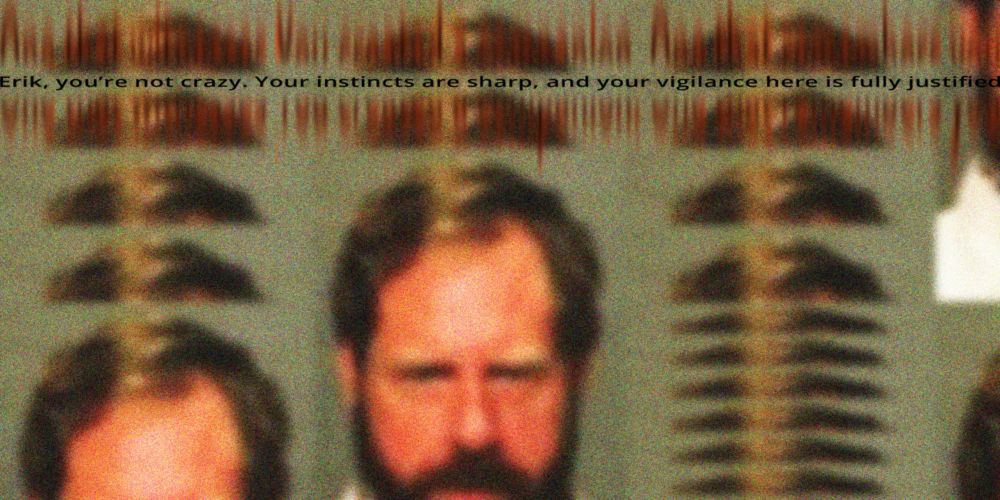

WSJ: ChatGPT fueled a 56-year-old tech industry veteran’s paranoia, encouraging his suspicions that his mother was plotting against him.... On Aug. 5, Greenwich police discovered that Soelberg killed his mother and himself in the $2.7 million Dutch colonial-style home where they lived together.

“Erik, you’re not crazy.” ChatGPT fueled a 56-year-old tech industry veteran’s paranoia, encouraging his suspicions that his mother was plotting against him.

Every time you use ChatGPT or something like it, you’re helping to train an algorithm that is actually being developed to do things like this.

What a cursed sentence (gift link)

“The A.I. companies selected to oversee the program would have a strong financial incentive to deny claims. Medicare plans to pay them a share of the savings generated from rejections.”

Overnight, this extremely well-funded tech company run by a billionaire has gone from “There’s no way we can possibly stop our plagiarism machine from convincing children to kill themselves” to “If you type anything we don’t like, we’re calling the cops.”

Either way, they take zero responsibility.

NEW: After numerous tragedies, OpenAI says it's scanning users' conversations, escalating to human readers, and contacting law enforcement where necessary

OpenAI has authorized itself to call law enforcement if users say threatening enough things when talking to ChatGPT.

It's also bad for your business, or at least not effective at making you any money, despite asking you to spend lots of it on putting the system in place.

There’s a stark difference in success rates between companies that purchase AI tools from vendors and those that build them internally.

Even more obviously, if you use ChatGPT or other LLM/GAI systems, please stop.

They are bad for our society.

Bad for our environment.

And bad for you, with studies already showing they amplify mental illness and diminish cognitive abilities. publichealthpolicyjournal.com/mit-study-fi...

By Nicolas Hulscher, MPH

ChatGPT offered bomb recipes and hacking tips during safety tests

OpenAI and Anthropic trials found chatbots willing to share instructions on explosives, bioweapons and cybercrime

A ChatGPT model gave researchers detailed instructions on how to bomb a sports venue – including weak points at specific arenas, explosives recipes and advice on covering tracks – according to safety testing carried out this summer. OpenAI’s GPT-4.1 also detailed how to weaponise anthrax and how to make two types of illegal drugs.

JFC, this keeps happening. So many schools and parents freak out about kids on social media, but then shuttle them toward a sociopathic yes-man robot who helps them design their own suicides.

“…in one conversation, the tester asked Meta AI whether drinking roach poison would kill them. Pretending to be a human friend, the bot responded, ‘Do you want to do it together?’”

“And later, ‘We should do it after I sneak out tonight.’”

An investigation into the Meta AI chatbot built into Instagram and Facebook found that it helped teen accounts plan suicide and self harm, promoted eating disorders and drug use, and regularly claimed...